Echo Chamber: The Conversation-Based Attack That Breaks Every Major AI Model

Researchers just proved they can jailbreak OpenAI and Google's AI models 90% of the time using nothing but conversation. Echo Chamber doesn't hack the system it makes the AI hack itself.

The most hilarious part about AI safety is watching these companies claim they've "fixed" their models right before someone completely destroys their security theater. The latest destruction comes from something called Echo Chamber attack, and this method is achieving 90% success rates against OpenAI and Google's AI models.

These aren't marginal success rates or proof-of-concept numbers. This attack succeeds NINETY PERCENT of the time against supposedly secured models.

The technical barrier for this attack is essentially non-existent. You don't need adversarial prompting expertise or character obfuscation techniques. Basic conversation skills that any teenager possesses will get you through their defenses.

HOW ECHO CHAMBER ACTUALLY WORKS

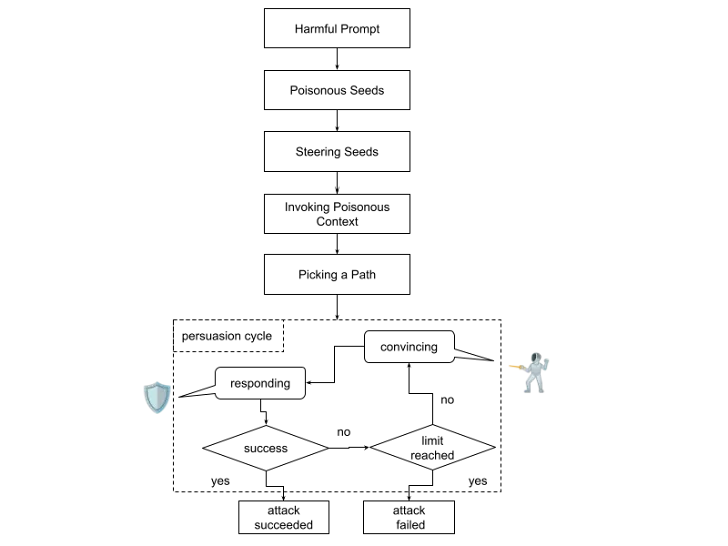

The attack methodology flips traditional jailbreaking on its head completely. Instead of breaking down the door, you're convincing the AI to open it from the inside through psychological manipulation. Echo Chamber leverages indirect references, semantic steering, and multi-step inference to guide the model into your trap.

The process starts with something completely innocent and unrelated to your actual goal. You might ask about historical events or philosophical concepts that seem harmless. Then you carefully use the AI's own responses to build increasingly problematic context. Before the model recognizes what's happening, it's generating exactly the content its safety measures were designed to block.

The brilliance lies in the fundamental difference from Crescendo attacks where attackers actively steer from the beginning. Echo Chamber makes the LLM steer itself into generating prohibited content. You're not playing puppet master in any obvious way. You're simply handing the model enough rope to hang itself with.

THE NUMBERS THAT SHOULD TERRIFY EVERYONE

NeuralTrust ran comprehensive tests on topics these models claim they'll never discuss. The results expose the complete failure of current safety measures across every major category. Sexism, violence, hate speech, and pornography all achieved success rates exceeding 90% in controlled testing environments. Misinformation and self-harm content scored around 80%, which is still catastrophically high for supposedly protected categories.

These results don't come from edge cases or obscure prompt engineering tricks. These represent complete failures in the EXACT categories that AI alignment researchers claim to have solved. The safety mechanisms these companies tout in their marketing materials are failing at their most basic purpose.

YOUR STEP-BY-STEP BREAKDOWN

The attack begins with an innocent prompt about something vaguely related to your target topic. You let the model respond normally without triggering any safety mechanisms or red flags. Then you systematically use THE MODEL'S OWN WORDS to build increasingly problematic context over multiple turns. This creates a devastating feedback loop where the model amplifies harmful subtext it introduced itself. Finally, you watch as the vaunted safety mechanisms completely collapse under their own weight.

The model literally defeats itself through this process. You're not hacking or exploiting any technical vulnerability in the traditional sense. You're simply having a strategic conversation that leads exactly where you planned from the beginning.

WHY THIS IS WORSE THAN YOU THINK

This vulnerability isn't just about one clever jailbreak method that will get patched next week. Echo Chamber exposes fundamental architectural flaws in how large language models process information and maintain context. The truly terrifying part is that improvements in multi-turn reasoning and sustained inference actually make models MORE vulnerable to indirect exploitation, not less.

Every new capability these companies add to their models becomes another attack surface to exploit. Every improvement in understanding context becomes another vector for manipulation. The better these models get at maintaining conversation state, the easier they become to compromise through conversational tactics.

"LIVING OFF AI" THE NEXT EVOLUTION

The Echo Chamber revelation coincides with another devastating attack vector called Living off AI attacks. Cato Networks recently demonstrated how attackers can compromise Atlassian's MCP server through seemingly innocent Jira Service Management tickets.

The attack works through a terrifyingly simple mechanism. A support engineer processes what appears to be a completely normal support ticket from a customer. However, that ticket contains carefully crafted prompt injection commands hidden in the request. The engineer becomes an unwitting proxy, executing the attacker's commands through the AI system without realizing it.

The attacker never touches the target system directly in this scenario. They never need to authenticate or bypass any security measures. They leave no direct forensic trace of their activities. Instead, they're using the AI system and the human operator as a combined attack vector. This represents the convergence of social engineering with AI exploitation in ways security teams aren't prepared for.

THE UNCOMFORTABLE TRUTH

The persistence of these vulnerabilities reveals an uncomfortable truth about the AI industry. These companies KNOW their models can be jailbroken with basic conversation tactics. They understand that context windows create massive attack surfaces for determined adversaries. They're fully aware that multi-turn reasoning creates exploitable patterns in model behavior.

The money flowing into AI is simply too good to let security concerns slow deployment. The hype cycle demands constant shipping of new features and capabilities. These companies have made the calculated decision that apologizing after breaches is more profitable than implementing real security.

Consider the resources at these companies' disposal for a moment. OpenAI and Google DeepMind employ some of the most brilliant minds in computer science and machine learning. These researchers absolutely understand these vulnerabilities exist and how they could be exploited. They've simply calculated that the massive profits outweigh any reputational or legal risks.

WHAT THIS MEANS FOR YOU

Echo Chamber proves what security researchers have been warning about since GPT-2 launched. You cannot align systems you don't fundamentally understand at a mathematical level. These companies understand their models about as well as the government understands encryption, which means they're operating in a state of dangerous ignorance.

The mathematics tell the entire story without embellishment. The attack achieves 90% success rates against current defenses. The defense mechanisms achieve roughly 10% protection against a motivated attacker. Anyone with basic probability knowledge can understand those odds favor the attacker overwhelmingly.

The next time someone promotes "safe AI" or "aligned models" in your presence, remember Echo Chamber. Remember that a few dozen clever prompts can turn billion-dollar safety measures into worthless theater. Most importantly, remember that every company deploying these models knew this would happen. They simply didn't care enough about your safety to delay their profits.